In the age of powerful AI systems, one truth has become clear: it’s not just about having the smartest model — it’s about feeding it the right information in the right way. This is where Context Engineering steps in — a discipline that’s rapidly becoming essential for anyone working with language models, AI agents, or intelligent automation.

What Is Context Engineering?

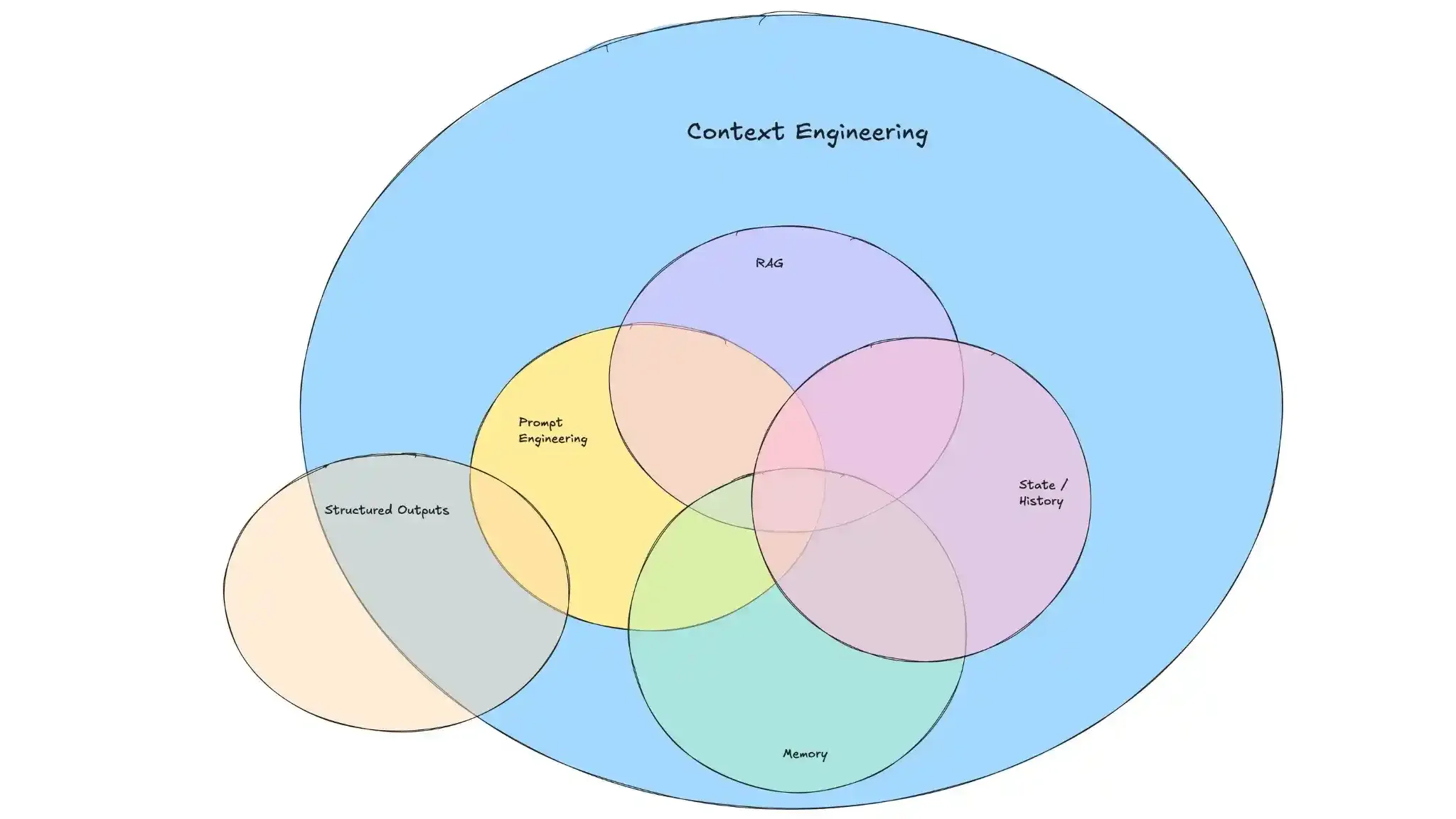

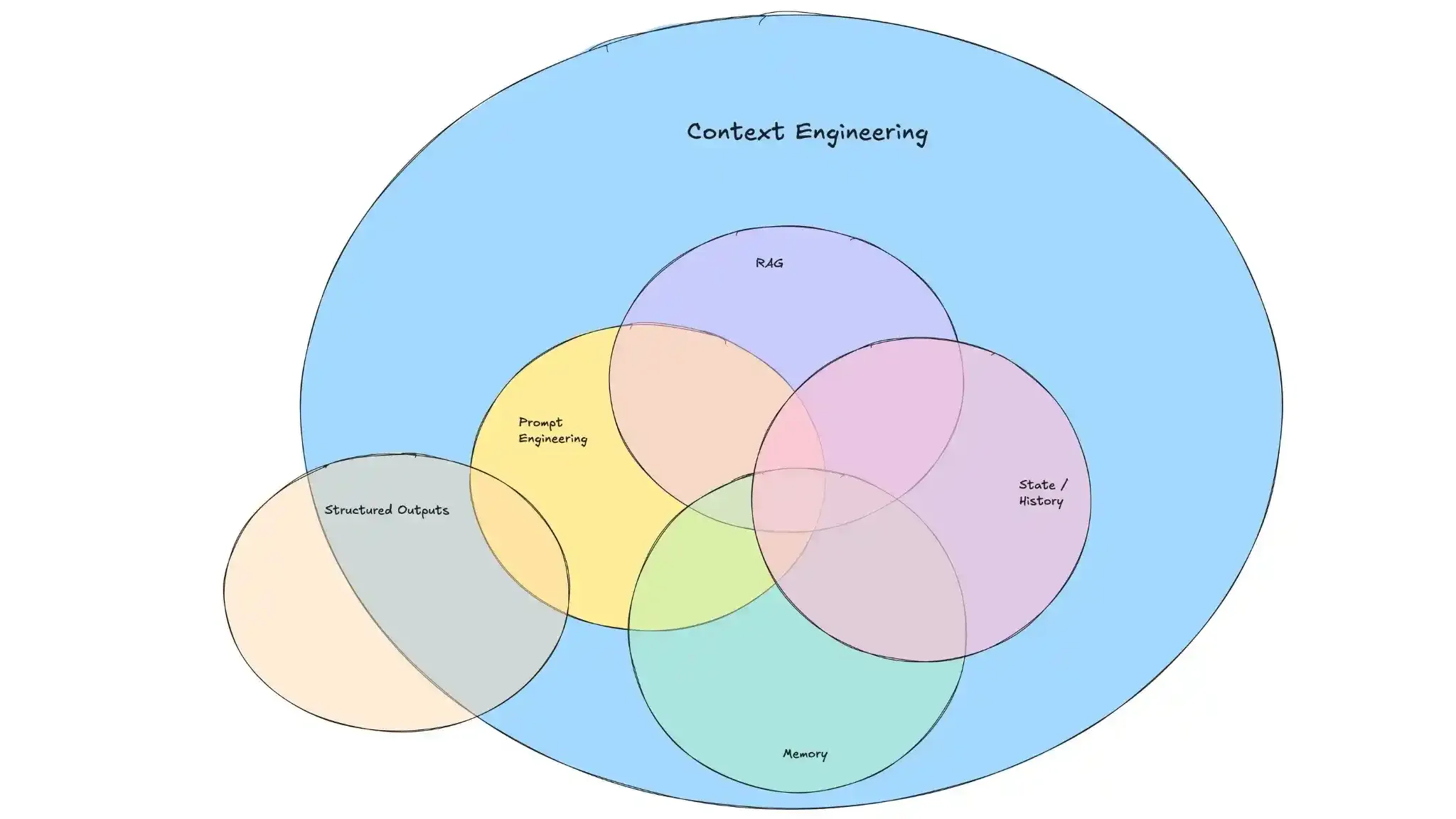

Context Engineering is the practice of designing, structuring, and optimizing the information that a large language model (LLM) uses to understand and perform a task. Unlike traditional prompt engineering — where you simply craft a good instruction — context engineering looks at the entire environment of an AI system: inputs, memory, tools, timing, structure, and more.

Think of it this way: if prompt engineering is like asking a smart assistant a question, context engineering is like setting up the perfect meeting — where the assistant has all the documents, knows the goals, understands the history, and is aware of what tools they can use to help.

Simple Definition:

Context Engineering is the systematic approach to designing, building, and optimizing the information environment that AI models operate within to achieve consistent, reliable, and intelligent outcomes. In practice, that environment typically includes:

- Dynamic information retrieval systems that pull relevant data in real time

- Memory management that maintains conversation history and learned preferences

- Tool integration that extends AI capabilities beyond text generation

- Output formatting that ensures consistent, structured responses

- Quality assurance mechanisms that validate and improve context quality
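To make one of these components concrete, memory management can be sketched as a rolling window of recent turns plus a small store of learned preferences. This is a minimal Python illustration, not any framework's API: the `ConversationMemory` class and its `max_turns` limit are hypothetical, and many real systems summarize older turns instead of simply dropping them.

```python
from collections import deque


class ConversationMemory:
    """Hypothetical memory: keeps the last `max_turns` messages plus learned preferences."""

    def __init__(self, max_turns: int = 4) -> None:
        # A deque with maxlen discards the oldest turn automatically when full.
        self.turns: deque = deque(maxlen=max_turns)
        self.preferences: dict[str, str] = {}

    def add_turn(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def remember(self, key: str, value: str) -> None:
        self.preferences[key] = value

    def as_context(self) -> str:
        """Render memory as text to prepend to the next model call."""
        prefs = ", ".join(f"{k}: {v}" for k, v in self.preferences.items())
        history = "\n".join(f"{role}: {text}" for role, text in self.turns)
        return f"Known preferences: {prefs or 'none'}\nRecent conversation:\n{history}"


memory = ConversationMemory(max_turns=2)
memory.remember("citation_style", "APA")
memory.add_turn("user", "Find papers on RAG.")
memory.add_turn("assistant", "Here are three papers...")
memory.add_turn("user", "Only from 2024, please.")  # pushes out the first turn
print(memory.as_context())
```

The bounded window keeps the context size predictable; the trade-off is that anything older than the window is lost unless it was promoted to a stored preference.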

Why It’s Becoming Critical

LLMs like GPT-4 or Claude 3 are extremely capable, but their success depends heavily on what and how much they’re given. Give them poor context — irrelevant, outdated, or messy data — and they perform poorly. But when you engineer context correctly, these models become precise, insightful, and even creative.

Where Prompt Engineering Stops, Context Engineering Begins

Let’s break this down.

- Prompt Engineering is crafting a good question or task instruction.

- Context Engineering is designing the full setup: What’s the user’s intent? What past interactions matter? What documents should be included? What tools should be enabled?

A great example is an AI research assistant. Instead of simply asking it, “What’s the latest on AI in healthcare?” — context engineering would involve:

- Providing access to recent academic papers

- Giving a timeframe (e.g., "from the last 2 months")

- Structuring the expected format (e.g., summary → sources → insights)

- Telling the system which subtopics to focus on

- Ensuring it doesn’t repeat what was already covered earlier
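One way to see the difference is to write that research request as structured data rather than a single question. The sketch below is hypothetical: `ResearchRequest`, its fields, and the sample subtopics are illustrative placeholders, and access to the papers themselves (a retrieval concern) is left out.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchRequest:
    """Hypothetical container for the context pieces of a research request."""
    question: str
    timeframe: str                 # e.g. "from the last 2 months"
    output_format: list[str]       # ordered sections of the answer
    subtopics: list[str]
    already_covered: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Turn the structured request into a single prompt string."""
        lines = [
            f"Question: {self.question}",
            f"Timeframe: {self.timeframe}",
            "Answer format: " + " -> ".join(self.output_format),
            "Focus on: " + ", ".join(self.subtopics),
        ]
        # Only mention prior coverage when there is some, to avoid empty noise.
        if self.already_covered:
            lines.append("Do not repeat: " + ", ".join(self.already_covered))
        return "\n".join(lines)


request = ResearchRequest(
    question="What's the latest on AI in healthcare?",
    timeframe="from the last 2 months",
    output_format=["summary", "sources", "insights"],
    subtopics=["diagnostic imaging", "clinical documentation"],  # placeholder subtopics
    already_covered=["FDA approvals overview"],                  # placeholder prior topic
)
print(request.render())
```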

The Real Mechanics of Context Engineering

Context Engineering touches several moving parts. Here's how it usually works in modern AI systems:

1. System Instructions

Every AI agent starts with a system prompt that sets its role or mindset. In context engineering, this instruction becomes more detailed and nuanced, for example:

"You are a senior research analyst. Use the user’s query to break the task into subtasks. Prioritize accuracy and diversity of perspectives. Return results in JSON format."

This sets the tone and expectations right from the start.
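Many chat-style LLM APIs accept the system prompt as the first entry in a list of role-tagged messages. The sketch below assumes that common `{"role": ..., "content": ...}` shape; `build_messages` is a hypothetical helper, and no model is actually called.

```python
# The system instructions from the example above, kept in one place.
SYSTEM_PROMPT = (
    "You are a senior research analyst. Use the user's query to break the task "
    "into subtasks. Prioritize accuracy and diversity of perspectives. "
    "Return results in JSON format."
)


def build_messages(user_query: str, system_prompt: str = SYSTEM_PROMPT) -> list[dict[str, str]]:
    """Place the system instructions ahead of the user's query (assumed message format)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_query},
    ]


messages = build_messages("What are the implications of quantum AI in cybersecurity?")
for m in messages:
    print(m["role"], "->", m["content"][:40])
```

Keeping the system prompt in a single constant makes it easy to version and test separately from user input.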

2. Dynamic User Inputs

The AI isn't just fed static instructions. It may receive:

- Current date and time

- User preferences

- Task history

- Tool configurations

- Memory from past sessions

These inputs let the model tailor each response to the user’s actual situation, so the output feels coherent rather than robotic.
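A minimal sketch of assembling these dynamic inputs fresh on each request follows. The function name, section labels, and sample values are assumptions for illustration; `now` is passed in so the output is reproducible, whereas a live system would typically use `datetime.now(timezone.utc)`.

```python
from datetime import datetime, timezone


def build_dynamic_context(
    now: datetime,
    preferences: dict[str, str],
    task_history: list[str],
    enabled_tools: list[str],
    memory: list[str],
    max_history: int = 3,
) -> str:
    """Assemble per-request context; only the most recent history items are kept."""
    sections = [
        f"Current date/time (UTC): {now.strftime('%Y-%m-%d %H:%M')}",
        # Sorting keys keeps the rendered text stable across runs.
        "User preferences: " + "; ".join(f"{k}={v}" for k, v in sorted(preferences.items())),
        "Recent tasks: " + "; ".join(task_history[-max_history:]),
        "Available tools: " + ", ".join(enabled_tools),
        "Remembered from past sessions: " + "; ".join(memory),
    ]
    return "\n".join(sections)


ctx = build_dynamic_context(
    now=datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc),
    preferences={"tone": "concise", "language": "en"},
    task_history=["outline report", "collect sources", "draft summary", "review citations"],
    enabled_tools=["web_search", "calculator"],   # hypothetical tool names
    memory=["prefers peer-reviewed sources"],
)
print(ctx)
```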

3. Retrieved Knowledge (RAG)

Retrieval-augmented generation (RAG) is a technique where the AI fetches documents from a database or the web and uses them to ground its response. Deciding what to retrieve, and how to summarize or compress it before it enters the context, is context engineering in action.
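The selection-and-compression step can be illustrated without external services. This sketch swaps in a crude word-overlap score where real RAG systems typically use embeddings or a search index, and it "compresses" by truncating to a word budget instead of summarizing; `retrieve` and `compress` are hypothetical helpers.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real tokenization."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return ids of up to k documents sharing the most words with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(text)), doc_id) for doc_id, text in docs.items()]
    # Highest overlap first; ties broken by id so the output is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def compress(text: str, max_words: int) -> str:
    """Truncate a document to a word budget (placeholder for real summarization)."""
    words = text.split()
    return " ".join(words[:max_words]) + (" ..." if len(words) > max_words else "")


docs = {
    "d1": "Quantum computers could break RSA encryption used in cybersecurity.",
    "d2": "A recipe for sourdough bread with a long fermentation.",
    "d3": "Post-quantum cryptography standards aim to protect cybersecurity systems.",
}
top = retrieve("quantum threats to cybersecurity encryption", docs)
context = "\n".join(f"[{d}] {compress(docs[d], 6)}" for d in top)
print(context)
```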

4. Structured Outputs

Good context engineers don’t leave formatting to chance. They define clear schemas for output, like:

```json
{
  "summary": "...",
  "key_sources": ["URL1", "URL2"],
  "insights": ["...", "..."],
  "confidence_score": 0.94
}
```

This ensures consistency and downstream usability.
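A schema only helps if responses are checked against it. The following standard-library sketch validates a model's raw JSON reply against the four fields in the example; treating `confidence_score` as a value between 0 and 1 is an assumption, since the example does not state a range. Dedicated schema-validation libraries are a common alternative.

```python
import json

# Expected fields and types, taken from the example schema (range for the score is assumed).
SCHEMA = {
    "summary": str,
    "key_sources": list,
    "insights": list,
    "confidence_score": (int, float),
}


def validate_output(raw: str) -> list[str]:
    """Return a list of problems; an empty list means the output matches SCHEMA."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]

    errors = []
    for key, expected_type in SCHEMA.items():
        if key not in data:
            errors.append(f"missing field: {key}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(data[key], bool) or not isinstance(data[key], expected_type):
            errors.append(f"wrong type for {key}")

    for key in ("key_sources", "insights"):
        value = data.get(key)
        if isinstance(value, list) and not all(isinstance(item, str) for item in value):
            errors.append(f"{key} must contain only strings")

    score = data.get("confidence_score")
    if isinstance(score, (int, float)) and not isinstance(score, bool) and not 0 <= score <= 1:
        errors.append("confidence_score must be between 0 and 1")
    return errors


good = '{"summary": "...", "key_sources": ["URL1", "URL2"], "insights": ["...", "..."], "confidence_score": 0.94}'
bad = '{"summary": "...", "key_sources": "URL1", "confidence_score": 1.4}'
print(validate_output(good))  # → []
print(validate_output(bad))
```

Returning every problem at once, rather than raising on the first, makes it straightforward to send the full error list back to the model in a retry prompt.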

Real-World Example: AI Deep Research Agent

Let’s look at a practical case — an agent designed to research any topic using multiple sources.

- User input: "What are the implications of quantum AI in cybersecurity?"

- System prompt: Defines the agent’s role as a research planner.

- Subtasks created: One for news, one for academic literature.

- Time filters: Past 30 days for news; past 12 months for papers.

- Domain focus: Technology and defense sectors.

- Output format: Structured subtasks with inferred date ranges.

By breaking the task down this way, the AI can search smarter, cite accurately, and provide actionable insights. Without context engineering, it would likely generate a vague or outdated response.
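The planning step in this example can be sketched as a function that expands one question into dated subtasks. It hard-codes the two windows from the example (30 days for news, and 365 days as an approximation of 12 months for papers) and takes today's date as a parameter; `Subtask` and `plan_subtasks` are hypothetical names, and an LLM might propose the subtasks itself instead of hard-coding them.

```python
from dataclasses import asdict, dataclass
from datetime import date, timedelta


@dataclass
class Subtask:
    source_type: str
    query: str
    start_date: str   # ISO dates keep the output JSON-friendly
    end_date: str
    domains: list[str]


# Assumed look-back windows per source type, mirroring the example.
WINDOWS = {"news": timedelta(days=30), "academic": timedelta(days=365)}


def plan_subtasks(question: str, today: date, domains: list[str]) -> list[dict]:
    """Create one subtask per source type with an inferred date range."""
    return [
        asdict(Subtask(
            source_type=source,
            query=question,
            start_date=(today - window).isoformat(),
            end_date=today.isoformat(),
            domains=domains,
        ))
        for source, window in WINDOWS.items()
    ]


plan = plan_subtasks(
    "What are the implications of quantum AI in cybersecurity?",
    today=date(2025, 6, 30),
    domains=["technology", "defense"],
)
for task in plan:
    print(task["source_type"], task["start_date"], "to", task["end_date"])
```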

Why Context Engineering Is a Game Changer

- Precision: Better context reduces hallucinations — those made-up facts AI sometimes produces.

- Efficiency: Filtering out irrelevant material means fewer input tokens per request, which lowers cost.

- Scalability: You can design multi-agent systems where each agent has a specific, optimized context.

- Consistency: Structured outputs enable AI to work inside apps, APIs, and workflows reliably.

In short, it’s not about writing better prompts — it’s about building better thinking environments for the model.
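The efficiency gain from context filtering comes from keeping only the most useful material within a fixed token budget. This greedy sketch assumes each snippet already carries a relevance score (for example from retrieval or a reranker) and approximates tokens by word count, where a real system would use the model's own tokenizer; `fit_to_budget` is a hypothetical helper.

```python
def approx_tokens(text: str) -> int:
    """Rough token estimate: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_budget(snippets: list[tuple[float, str]], budget: int) -> list[str]:
    """Greedily keep the highest-scoring snippets that still fit in the budget."""
    chosen, used = [], 0
    for score, text in sorted(snippets, key=lambda s: s[0], reverse=True):
        cost = approx_tokens(text)
        # Skip anything that would overflow, but keep checking smaller snippets.
        if used + cost <= budget:
            chosen.append(text)
            used += cost
    return chosen


snippets = [
    (0.91, "Quantum attacks threaten RSA and ECC key exchange."),
    (0.35, "Company history and unrelated marketing copy for a vendor."),
    (0.78, "NIST has standardized post-quantum algorithms."),
]
print(fit_to_budget(snippets, budget=15))
```

Greedy selection is simple and fast; it is not guaranteed to find the best-scoring combination, which would be a knapsack problem.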

What Many Get Wrong About Context Engineering

Despite its growing importance, many developers overlook these key principles:

- Context isn’t static: It changes per task and should be built dynamically.

- More is not better: Excess or irrelevant data dilutes the signal the model needs, raises token costs, and can crowd out the details that actually matter.

- Structure matters: Well-organized inputs produce reliable, reusable outputs.

- Evaluation is essential: You need metrics like accuracy, relevance, and cost to measure effectiveness.
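Evaluation can start with a few numbers per run. The sketch below computes the three metrics named above under illustrative definitions: accuracy as the share of reference answers the model reproduced, relevance as the share of supplied context snippets judged relevant, and cost from token counts at an assumed per-1K-token price. `RunRecord`, `evaluate`, and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RunRecord:
    predicted: list[str]      # answers or facts the model produced
    expected: list[str]       # reference answers
    context_ids: list[str]    # snippets included in the context
    relevant_ids: set[str]    # snippets a reviewer judged relevant
    prompt_tokens: int
    completion_tokens: int


def evaluate(run: RunRecord, price_per_1k_tokens: float) -> dict[str, float]:
    """Compute accuracy, context relevance, and cost for one run."""
    hits = sum(1 for item in run.expected if item in run.predicted)
    accuracy = hits / len(run.expected) if run.expected else 0.0
    relevant = sum(1 for cid in run.context_ids if cid in run.relevant_ids)
    relevance = relevant / len(run.context_ids) if run.context_ids else 0.0
    total_tokens = run.prompt_tokens + run.completion_tokens
    cost = total_tokens / 1000 * price_per_1k_tokens
    return {"accuracy": accuracy, "relevance": relevance, "cost": round(cost, 6)}


run = RunRecord(
    predicted=["A", "B", "D"],
    expected=["A", "B", "C", "D"],
    context_ids=["d1", "d2", "d3", "d4"],
    relevant_ids={"d1", "d3"},
    prompt_tokens=1500,
    completion_tokens=500,
)
print(evaluate(run, price_per_1k_tokens=0.01))  # assumed price
```

Tracking these per run makes it possible to compare context strategies, for instance whether tighter filtering lowers cost without hurting accuracy.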

Looking Ahead: The Future of Context Engineering

As models get faster and more multimodal, context engineering will extend beyond text. It will involve audio, video, 3D environments, real-time tools, and even emotional states.

There’s also growing interest in automated context builders — systems that learn what context helps most and adapt it on the fly. This is already being explored in LangChain, LlamaIndex, and DAIR.AI’s recent frameworks.

Soon, we won’t just be engineering prompts — we’ll be engineering memory, cognition, and collaboration for AI.

Final Thoughts

Context Engineering is not just a buzzword. It’s the bridge between AI potential and AI performance.

If you’re building tools, workflows, or experiences powered by large models, mastering context engineering isn’t optional — it’s the difference between mediocre automation and truly intelligent systems.

So the next time your AI gives you a half-baked answer, ask yourself: Did I engineer the right context?