The data leak occurred when Microsoft researchers published open-source AI training data on GitHub. They provided a link for users to download the data from an Azure storage account. However, the access token shared in the GitHub repository was overly permissive. It allowed read/write access to the entire storage account rather than just the intended data.

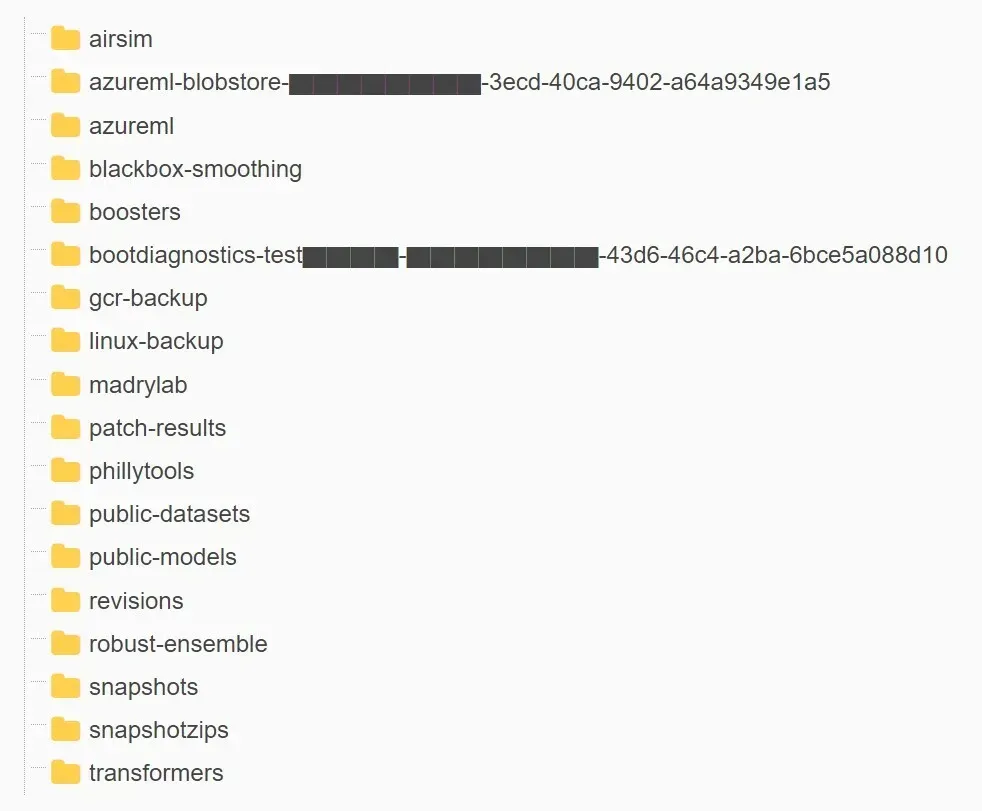

As discovered by Wiz, this account contained 38 TB of private Microsoft data, including:

- Backups of employee computers, containing passwords and secret keys

- Over 30,000 internal Microsoft Teams messages from 359 Microsoft employees, also stored in those backups

Exposed Microsoft containers (image: Wiz.io)

The root cause was the use of Azure Shared Access Signature (SAS) tokens without proper permissions scoping. SAS tokens allow granular access to Azure storage accounts. However, if configured improperly, they can grant excessive permissions.

The Wiz Research Team wrote: "In addition to the overly permissive access scope, the token was also misconfigured to allow 'full control' permissions instead of read-only. Meaning, not only could an attacker view all the files in the storage account, but they could delete and overwrite existing files as well."

According to the Wiz team, a Shared Access Signature (SAS) token in Azure is a signed URL that grants access to Azure Storage data. The user can customize the access level: permissions range from read-only to full control, and the scope can be a single file, a container, or an entire storage account.

The expiry time is also completely customizable, allowing the user to create never-expiring access tokens.

In this case, the token provided full control of the storage account instead of read-only access. It also lacked an expiry date, meaning access would persist indefinitely.
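To make the three settings above concrete (permissions, scope, expiry), here is a minimal sketch that inspects the query string of a SAS URL using only the Python standard library. It relies on the documented SAS query parameters `sp` (signed permissions), `srt` (signed resource types, present on account-level tokens), and `se` (signed expiry). The function name `audit_sas_url`, the seven-day `MAX_LIFETIME` threshold, and the example URLs are assumptions made for illustration; they are not taken from the Wiz report or from Microsoft guidance.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlparse, parse_qs

# Hypothetical policy threshold chosen for this sketch; not an Azure default.
MAX_LIFETIME = timedelta(days=7)


def audit_sas_url(url, now=None):
    """Return a list of findings for a SAS URL (empty list = nothing flagged)."""
    now = now or datetime.now(timezone.utc)
    params = parse_qs(urlparse(url).query)
    findings = []

    # sp: signed permissions, e.g. "r" (read) or "rwdl" (read, write, delete, list).
    permissions = params.get("sp", [""])[0]
    extra = sorted(set(permissions) - {"r", "l"})
    if extra:
        findings.append("grants more than read/list: " + "".join(extra))

    # srt: signed resource types, which appears on account SAS tokens.
    if "srt" in params:
        findings.append("account-level token, not limited to one container or file")

    # se: signed expiry time in ISO 8601 format.
    expiry_raw = params.get("se", [""])[0]
    try:
        expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
    except ValueError:
        findings.append("missing or unparseable expiry")
    else:
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)
        if expiry - now > MAX_LIFETIME:
            findings.append(f"expires {expiry.date()}, beyond the allowed lifetime")
    return findings


if __name__ == "__main__":
    # Made-up URL with a placeholder signature; not the token from the incident.
    example = (
        "https://example.blob.core.windows.net/"
        "?sv=2020-08-04&ss=b&srt=sco&sp=rwdl&se=2099-01-01T00:00:00Z&sig=REDACTED"
    )
    for finding in audit_sas_url(example):
        print(finding)
```

A check like this only reads what the URL declares; it cannot tell whether the token has been revoked, so it complements rather than replaces access reviews.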

There are three types of SAS tokens: Account SAS, Service SAS, and User Delegation SAS. Of these, Account SAS tokens are the most popular type.
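The three token types can usually be told apart from the URL alone. The sketch below is a heuristic, not an official classifier: it assumes that account SAS tokens carry both `ss` (signed services) and `srt` (signed resource types), that user delegation SAS tokens carry `skoid` (signed key object ID), and that any other signed URL is a service SAS. The function name `classify_sas` and the example URLs are hypothetical.

```python
from urllib.parse import urlparse, parse_qs


def classify_sas(url):
    """Guess the SAS type from query parameters (heuristic, see assumptions above)."""
    params = parse_qs(urlparse(url).query)
    if "sig" not in params:
        return "not a SAS URL"      # every SAS URL carries a signature
    if "ss" in params and "srt" in params:
        return "account"            # scoped to services within a storage account
    if "skoid" in params:
        return "user delegation"    # signed with a user delegation key
    return "service"                # fallback: signed for a single service resource


if __name__ == "__main__":
    # Made-up URL with a placeholder signature.
    url = "https://example.blob.core.windows.net/?sv=2020-08-04&ss=b&srt=sco&sp=r&sig=REDACTED"
    print(classify_sas(url))
```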

Due to a lack of monitoring and governance, SAS tokens pose a security risk, and their usage should be as limited as possible. These tokens are very hard to track, as Microsoft does not provide a centralized way to manage them within the Azure portal.

SAS security risks (image: Wiz.io)

The Wiz Research Team further added that these tokens can be configured to last effectively forever, with no upper limit on their expiry time, and that using Account SAS tokens for external sharing is therefore unsafe and should be avoided.

Wiz recommends restricting the use of account-level SAS tokens due to the lack of governance. Additionally, dedicated storage accounts should be used for any external sharing purposes. Proper monitoring and security reviews of shared data are also advised.
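One possible form such a security review could take is a pre-publication scan of repository files for SAS URLs. The following is a rough sketch under stated assumptions: it treats any URL whose query string contains both `sv` (signed version) and `sig` (signature) as a SAS URL, and it reports only the host and path so the token is not copied into logs. The function name `find_sas_urls`, the URL regex, and the sample text are invented for this example and are not part of Wiz's recommendations.

```python
import re
from urllib.parse import urlparse, parse_qs

# Loose URL matcher: stops at whitespace, quotes, angle brackets, ")" and "]".
URL_PATTERN = re.compile(r"https?://[^\s\"'<>)\]]+")


def find_sas_urls(text):
    """Return (line_number, url_without_query) for each URL that looks like a SAS URL."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for match in URL_PATTERN.finditer(line):
            parsed = urlparse(match.group())
            params = parse_qs(parsed.query)
            # Assumption: a signed version plus a signature marks a SAS URL.
            if "sv" in params and "sig" in params:
                hits.append((line_no, f"{parsed.scheme}://{parsed.netloc}{parsed.path}"))
    return hits


if __name__ == "__main__":
    # Made-up README content with a placeholder signature.
    readme = (
        "Download the models here:\n"
        "[data](https://example.blob.core.windows.net/models"
        "?sv=2020-08-04&sp=r&se=2024-01-02T00:00:00Z&sig=REDACTED)\n"
    )
    for line_no, location in find_sas_urls(readme):
        print(f"line {line_no}: possible SAS URL for {location}")
```

A scan like this catches only tokens that appear as plain URLs in text files; it would miss tokens stored in other forms.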

Microsoft has since invalidated the exposed SAS token and conducted an internal impact assessment. The company has also acknowledged the incident in a blog post.

This case highlights the new risks organizations face when starting to leverage the power of AI more broadly, as more of their engineers now work with massive amounts of training data. As data scientists and engineers race to bring new AI solutions to production, the massive amounts of data they handle require additional security checks and safeguards.